Investigate and Correct CVEs with the K8s API

Detecting and understanding the impact of CVEs on your K8s cluster using Styra

Note: The exploit used for the vulnerability in this blog's example has cropped up again! It has been assigned a new identifier, CVE-2019-11246, because the previous patch didn't fully solve the issue, and exploits remain successful in the wild. This underscores the need to programmatically ensure that your K8s environment isn't vulnerable to these types of exploits! Read on to see how to address this and other CVEs even before patches are released…

When NIST (https://nvd.nist.gov) announces a new CVE (Common Vulnerabilities and Exposures) entry that impacts Kubernetes, kube administrators and IT security teams need to quickly understand the impact of the vulnerability and protect their Kubernetes clusters. Often no patch is available yet, so in addition to assessing the impact, DevOps teams have to decide whether to create a custom fix that mitigates the risk of that CVE without bringing down the entire app or system.

The process typically looks like this:

1. Scrape the kube api-server audit logs to find any evidence of the CVE

2. Notify users of the potential vulnerability

3. Decide whether to write and implement a temporary fix yourself, or…

4. Wait for a fix, upgrade the cluster once it's released, and hope the vulnerability isn't exploited in the meantime.
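For comparison, here is roughly what the manual step 1 can look like: a minimal Python sketch that scans an api-server audit log for `pods/exec` requests that run `tar`. It assumes the audit log is written as JSON lines by the log backend and that your audit policy records `pods/exec` events at least at the `Metadata` level; the function name `tar_exec_events` is hypothetical.

```python
import json
from urllib.parse import parse_qs, urlsplit


def tar_exec_events(lines):
    """Yield (user, namespace, pod, command) for every pods/exec audit event
    whose command starts with tar.

    Assumes `lines` is an iterable of JSON-lines audit events (one event per
    line), as written by the api-server's log audit backend.
    """
    for line in lines:
        line = line.strip()
        if not line:
            continue
        event = json.loads(line)
        ref = event.get("objectRef") or {}
        if ref.get("resource") != "pods" or ref.get("subresource") != "exec":
            continue
        # The exec command is carried in the request URI as repeated
        # ?command=... query parameters (parse_qs also URL-decodes them).
        query = parse_qs(urlsplit(event.get("requestURI", "")).query)
        command = query.get("command", [])
        # Match both "tar" and absolute paths such as "/bin/tar".
        if command and command[0].rsplit("/", 1)[-1] == "tar":
            user = (event.get("user") or {}).get("username")
            yield user, ref.get("namespace"), ref.get("name"), command
```

You could call it on an open audit log file, e.g. `for hit in tar_exec_events(open(path)): print(hit)`. Each hit still needs a human to decide whether it was a legitimate `kubectl cp` or an exploit attempt, which is exactly the manual effort the rest of this post aims to remove.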

This approach isn’t much different from what security teams have always done, but it is manual and requires multiple steps. Kubernetes gives us tools to automate and simplify operations based on desired state, so I wondered: can we use modern tools and automation to simplify this process? (As you may have guessed, the answer is “Yes!”) In this blog I’ll focus on a recent k8s CVE (https://nvd.nist.gov/vuln/detail/CVE-2018-1002101) and show you how to eliminate the manual effort of log scraping, user notification, and fix creation.

In the old world, each of the steps (analysis, notification, blocking) is a discrete, unrelated action. When it comes to Kubernetes, however, Styra can complete all three steps with the exact same policy. With Styra deployed as a validating admission controller for Kubernetes, we can author, distribute, and analyze policies, and communicate with other teams in the organization about which policies are enforced (and why) and which will be enforced in the future.

This blog uses a particular vulnerability to showcase what I’m describing. This CVE was exposed in Kubernetes versions 1.9.0-1.9.9, 1.10.0-1.10.5, and 1.11.0-1.11.1, and it affected the `kubectl cp` file-copy command. The vulnerability leveraged the fact that a pod containing a malicious `tar` binary (which `kubectl cp` uses to tar/untar the copied files) could exploit symbolic links to create or modify arbitrary files in the destination file system.
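To make the symlink problem concrete, the following minimal Python sketch lists the members of a tar archive that would land outside a destination directory, either through absolute or `../` paths or through a symbolic or hard link that points outside it. It illustrates the class of check involved and is not the code kubectl uses; `unsafe_members` is a hypothetical name. A file written through a link created earlier in the same archive is caught only indirectly, because the escaping link itself is flagged.

```python
import os
import tarfile


def unsafe_members(archive_path, dest_dir):
    """Return names of archive members that would be written outside dest_dir.

    A member is flagged when its own path escapes dest_dir (absolute paths or
    "../"), or when it is a symlink/hardlink whose target escapes dest_dir.
    """
    dest = os.path.realpath(dest_dir)

    def inside(path):
        # realpath normalizes ".." and resolves symlinks that already exist on disk.
        return os.path.commonpath([dest, os.path.realpath(path)]) == dest

    flagged = []
    with tarfile.open(archive_path) as tar:
        for member in tar.getmembers():
            target = os.path.join(dest, member.name)
            ok = inside(target)
            if member.issym():
                # Symlink targets are resolved relative to the link's directory.
                ok = ok and inside(os.path.join(os.path.dirname(target), member.linkname))
            elif member.islnk():
                # Hardlink targets are archive member names, relative to dest.
                ok = ok and inside(os.path.join(dest, member.linkname))
            if not ok:
                flagged.append(member.name)
    return flagged
```

Running a check like this before extracting anything returned by an untrusted container is one way to reason about the attack: the archive's contents are chosen by whoever controls the `tar` binary in the pod.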


Detect potential exploitations of the CVE

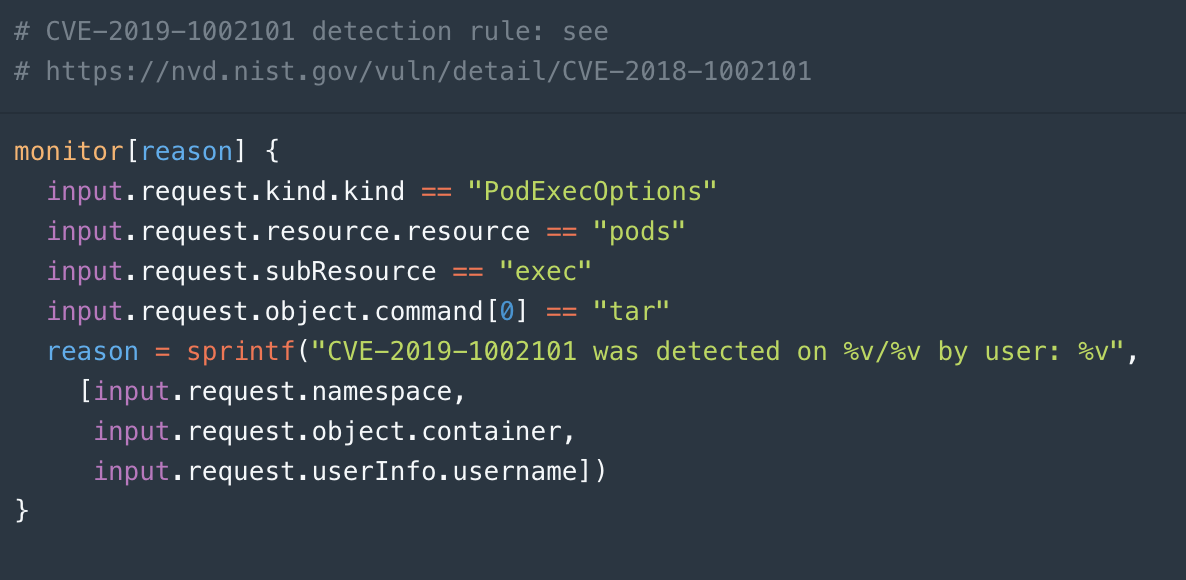

To detect and prevent potential exploits, it’s important to understand that kubectl translates the `kubectl cp` command into an `exec` operation that tars/untars the files inside the destination (or source) pod. Hence, to detect a file copy operation, we just need to check all `exec` operations whose command is `tar`.

To find all instances of that command with Styra, you write the policy shown below, which monitors all “PodExecOptions” objects that apply to “pods” and whose `exec` command is `tar`. You can refine this policy further if you know more about the exploit, but this is a good starting point.

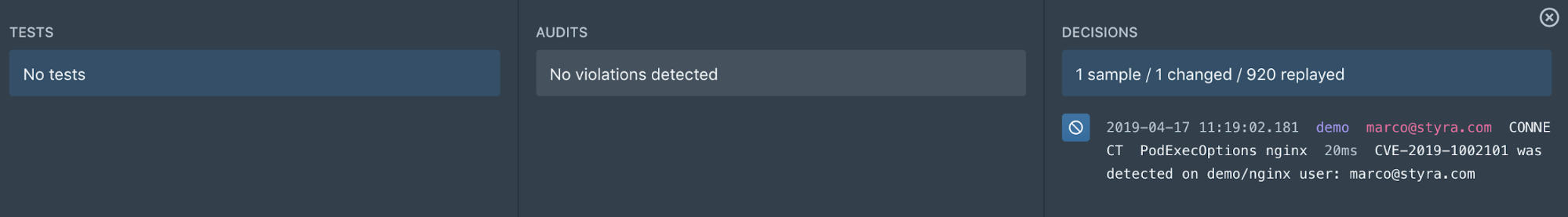
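The policy in the screenshot is written in Rego. As a rough, hypothetical Python rendering of the same conditions, the check below inspects an AdmissionReview request and returns a reason when a `PodExecOptions` object for the `pods/exec` subresource starts with `tar`. The function name and reason text are illustrative assumptions, and like the original policy it is only a starting point: for example, it would not notice `tar` wrapped in `sh -c "..."`.

```python
def monitor_reasons(review):
    """Return reasons to flag an AdmissionReview for a pods/exec request running tar.

    Illustrative Python version of the monitor rule; the real policy is Rego.
    """
    req = review.get("request") or {}
    if (req.get("kind") or {}).get("kind") != "PodExecOptions":
        return []
    if (req.get("resource") or {}).get("resource") != "pods":
        return []
    if req.get("subResource") != "exec":
        return []
    command = (req.get("object") or {}).get("command") or []
    # kubectl cp runs tar in the pod; match "tar" as well as "/bin/tar" etc.
    if command and command[0].rsplit("/", 1)[-1] == "tar":
        return [f"tar exec in pod {req.get('namespace')}/{req.get('name')} (possible kubectl cp)"]
    return []
```

An empty list means "nothing to report", which keeps the same shape as a Rego partial rule such as `monitor[reason]` that produces a set of reasons.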

To find the commands that violate this policy, you can ask Styra to run impact analysis on it. One of the analyses reruns all previously logged decisions against the new rule to find violations: requests that were previously allowed but would be denied by the new rule. The impact analysis correctly detects that the vulnerable command would have been denied by the policy shown above.
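Conceptually, that replay is simple. The sketch below assumes a hypothetical decision-log format in which each entry holds the original `input` (an AdmissionReview) and the `result` that was returned; it reruns a new rule over every entry and keeps the ones that were allowed before but would be flagged now. It is not Styra's implementation, just the idea in a few lines of Python.

```python
from typing import Callable, Iterable, List, Tuple

# A rule takes an AdmissionReview dict and returns a list of violation reasons.
Rule = Callable[[dict], List[str]]


def impact_analysis(decision_log: Iterable[dict], rule: Rule) -> List[Tuple[str, List[str]]]:
    """Replay logged decisions against `rule`.

    Returns (request uid, reasons) for every entry that was previously
    allowed but for which the new rule now returns at least one reason.
    """
    changed = []
    for entry in decision_log:
        was_allowed = entry["result"]["allowed"]
        reasons = rule(entry["input"])
        if was_allowed and reasons:
            changed.append((entry["input"]["request"]["uid"], reasons))
    return changed
```

Because only previously allowed requests are reported, the output is exactly the list of requests whose outcome the new rule would change.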

We then need to analyze the results to determine whether any of them are actual exploits, what steps to take if they are, and whether there are legitimate uses of kubectl cp.

Patch K8s until the cluster can be updated

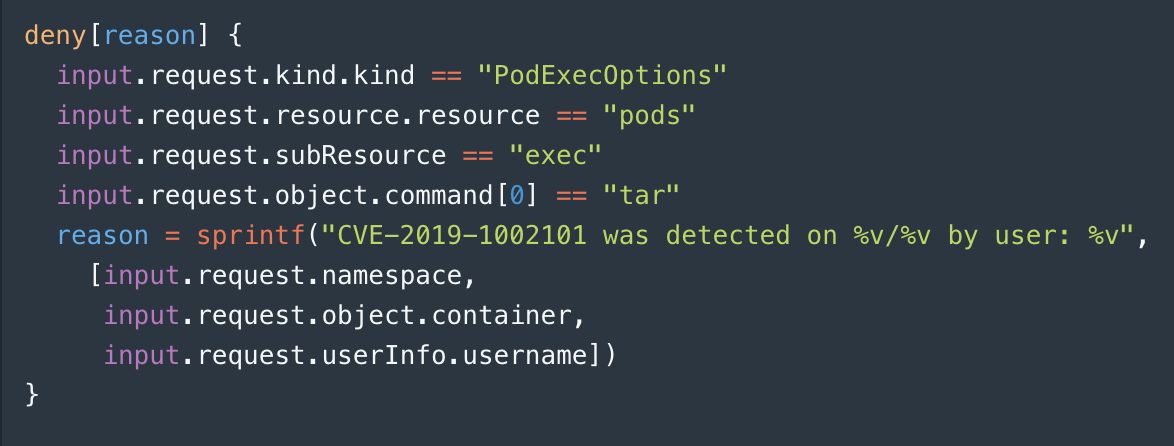

Using the results from the last section, you know whether there are legitimate workflows that use kubectl cp. You can choose to leave the policy in monitoring mode and set up alerts in case the vulnerability is exploited, so SecOps teams can act when necessary. Alternatively, you can put the policy in enforce mode, which blocks those requests entirely. This prevents the exploit until a patch is available and you have time to perform the upgrade.

Going from monitoring to enforcing is simple: just change the rule head from

`monitor[reason] { … }`

to

`deny[reason] { … }`

and then publish that policy. (If you prefer, you can first publish the policy to git so it goes through peer review.)
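On the wire, the difference between the two modes comes down to the `allowed` field of the admission response. The sketch below is a hypothetical webhook-side helper, not Styra's code: given the reasons produced by a rule, monitor mode still allows the request and only logs an alert, while enforce mode denies it and returns the reasons to the client. It assumes the `admission.k8s.io/v1` AdmissionReview format; older clusters use `v1beta1` instead.

```python
import logging
from typing import List

log = logging.getLogger("admission")


def admission_response(review: dict, reasons: List[str], enforce: bool) -> dict:
    """Build the AdmissionReview returned by a validating webhook.

    monitor mode (enforce=False): always allow, but log an alert on violations.
    enforce mode (enforce=True): deny the request when there are violations.
    """
    uid = review["request"]["uid"]
    if reasons and not enforce:
        log.warning("monitor-only violation uid=%s: %s", uid, "; ".join(reasons))
    allowed = not (enforce and reasons)
    response = {"uid": uid, "allowed": allowed}
    if not allowed:
        # The api-server passes this message back to the user whose request was denied.
        response["status"] = {"code": 403, "message": "; ".join(reasons)}
    return {"apiVersion": "admission.k8s.io/v1", "kind": "AdmissionReview", "response": response}
```

Keeping the rule and the mode switch separate mirrors the `monitor` to `deny` change above: the conditions stay identical, and only the consequence of a match changes.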

Now you can confirm that the policy is enforced by running a kubectl cp command yourself and checking the result:

```shell
$ kubectl cp nginx:/etc/hosts /tmp/hosts
```

Conclusion

Using a few lines of policy-as-code, we were able to detect, block, flag, and evaluate the impact of a CVE without disrupting cluster operations. In this post we chose a recent CVE that is simple to detect, but Styra and the Rego language give you the flexibility to detect and protect against more complicated CVEs as well.

Appendix: Configuration details

To make sure Styra has visibility into and control over all the relevant kubectl commands, configure the k8s validating webhook to send all CONNECT operations to Styra (CONNECT is the operation Kubernetes uses when it sends exec requests to the admission controller). For example, the configuration below sends all operations for all resources and subresources to Styra:

```yaml
rules:
- apiGroups:
  - '*'
  apiVersions:
  - '*'
  operations:
  - '*'
  resources:
  - '*/*'
```
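If you would rather not route every request to the webhook, it helps to know how these rules are matched. The sketch below is a hypothetical Python helper that mirrors, as I understand it, how the api-server matches a webhook rule's `operations`, `apiGroups`, `apiVersions`, and `resources`: `*` means all resources but no subresources, `pods/exec` means one subresource, and `*/*` means everything. It lets you check whether a given rule would send the request behind `kubectl cp` (operation `CONNECT` on `pods/exec` in the core `v1` API) to Styra.

```python
def rule_matches(rule, operation, group, version, resource, subresource=""):
    """Return True if a webhook rule would send the described request to the webhook.

    `rule` is a dict with the same keys as the YAML above. A resource pattern
    "res/sub" matches when res is "*" or the resource, and sub is "*" or the
    subresource; a pattern without "/" only matches requests with no subresource.
    """
    def allows(values, value):
        return "*" in values or value in values

    if not (allows(rule.get("operations", []), operation)
            and allows(rule.get("apiGroups", []), group)
            and allows(rule.get("apiVersions", []), version)):
        return False
    for pattern in rule.get("resources", []):
        res, _, sub = pattern.partition("/")
        if res in ("*", resource) and sub in ("*", subresource):
            return True
    return False
```

For example, a rule limited to `operations: ['CONNECT']` and `resources: ['pods/exec']` in the core group would be enough for this particular CVE, while `resources: ['*']` alone would miss exec requests entirely.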